Wednesday, August 04, 2010

Tuesday, May 12, 2009

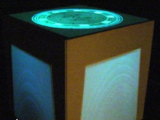

Final ReacTable Mock-Up

Below is a mock-up of what my ReacTable will look like. The MacBook on the bottom shelf powers the whole experiment using the ReacTIVision software and Pure Data. Pure Data is a visual programming language for audio; it receives the fiducial data that ReacTIVision sends over Open Sound Control (OSC) and uses it to generate the sound for my ReacTable. The ReacTIVision software is programmed to recognise the fiducials on the top of the table, and when these are moved or rotated by a user, Pure Data changes the sound by altering the level of the sine wave. This creates a flexible interface that several users can play at the same time to create a piece of music. This is exactly what I wanted to make: an example of interaction that does not need spoken language, breaking down barriers and crossing boundaries for people all over the world.
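To make the "rotate a marker, change the sound" idea concrete, here is a rough sketch in Python rather than Pure Data (my actual setup is a Pd patch, not this code). It assumes the marker angle arrives in radians, as TUIO reports it, and that one full turn maps linearly onto a level between 0 and 1. The function names are hypothetical.

```python
import math

TWO_PI = 2.0 * math.pi


def angle_to_level(angle_rad: float) -> float:
    """Map a fiducial angle (radians) to a level between 0.0 and 1.0.

    Assumed mapping: linear over one full turn, wrapping after 2*pi.
    """
    return (angle_rad % TWO_PI) / TWO_PI


def sine_block(freq_hz: float, level: float, n: int,
               sample_rate: int = 44100) -> list[float]:
    """Generate n samples of a sine wave whose amplitude is `level`."""
    return [level * math.sin(TWO_PI * freq_hz * i / sample_rate) for i in range(n)]


# A quarter turn of the marker gives a quarter of the full level.
level = angle_to_level(math.pi / 2)
samples = sine_block(440.0, level, 64)
print(level, max(abs(s) for s in samples))
```

A linear mapping is just the simplest choice; a real patch might prefer an exponential curve, since loudness is perceived roughly logarithmically.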

Wednesday, May 06, 2009

Sound and Reactivision

After days of struggling to get the sound working with ReacTIVision, XML and Ableton Live, I finally decided to use Pure Data with ReacTIVision to link the fiducials to the sound, with help from Musa's blog.

This proved to be much easier than using Ableton: ReacTIVision sends a message over Open Sound Control that Pure Data uses to play an oscillator, so when a fiducial is rotated the level of the sine wave increases.
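The message passing described above can be sketched in Python. TUIO messages are ordinary OSC messages: an address (here `/tuio/2Dobj`), a type-tag string, then big-endian arguments, with every field padded to a multiple of four bytes. This minimal encoder/decoder handles only int, float and string arguments, and it does not unpack the OSC bundles that ReacTIVision actually wraps its messages in, so treat it as an illustration of the format rather than a replacement for Pure Data's own OSC objects.

```python
import struct


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad a byte string to a multiple of 4 bytes (OSC rule)."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def _read_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a padded OSC string; return it and the offset of the next field."""
    end = data.index(b"\x00", offset)
    # Skip the terminator and any padding up to the next 4-byte boundary.
    return data[offset:end].decode("ascii"), (end + 4) & ~3


def encode_osc(address: str, args: list) -> bytes:
    """Encode an OSC message with int ('i'), float ('f') and string ('s') args."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def decode_osc(data: bytes) -> tuple[str, list]:
    """Decode a single OSC message (not a bundle) into (address, args)."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing type-tag string")
    args: list = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            value, offset = _read_string(data, offset)
            args.append(value)
        else:
            raise ValueError(f"unsupported type tag: {tag!r}")
    return address, args


# A TUIO 1.1 "set" message for one fiducial:
# set, session id, fiducial id, x, y, angle, X, Y, A, m, r
packet = encode_osc("/tuio/2Dobj", ["set", 12, 3, 0.5, 0.25, 1.5, 0.0, 0.0, 0.0, 0.0, 0.0])
print(decode_osc(packet))
```

Floats are sent as 32-bit values, so an angle like 1.5 survives the round trip exactly, but arbitrary values will come back slightly rounded.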

Next I will have to make my own smaller version of the ReacTable to find out whether the table brings people together to make a song without spoken communication, purely by interacting with each other and using their senses.

Composition on the Table

Four white tables have various user interfaces such as switches, dials, turntables and sliding boards that a player can touch. Projectors suspended from the ceiling project computer-generated images onto the tables and interfaces. When players operate the interfaces, the projected images change in real time as if they were physically attached to them. Sounds are also produced in relation to the movement of the images.

Jam-O-Drum

By combining velocity-sensitive input devices and computer graphics in an integrated tabletop surface, the Jam-O-Drum lets six to twelve simultaneous players take part in collaborative musical improvisation. It was designed to support face-to-face audio and visual collaboration: players hit drum pads embedded in the surface to create rhythmic music and trigger visual changes together, with the community drum circle used as a metaphor to guide the form and content of the interaction design. The Jam-O-Drum is a permanent exhibit in the heart of the Sound Lab at the EMP.

FTIR Musical Application

A musical interface for a large-scale, high-resolution, multi-touch display surface based on frustrated total internal reflection (FTIR). Multi-touch sensors allow fully bimanual operation as well as chording gestures, offering the potential for highly expressive input. Such devices also inherently accommodate multiple users, which makes them especially useful for larger interaction scenarios such as interactive tables.

AudioTouch

AudioTouch is an interactive multi-touch interface for computer music exploration and collaboration. The interface features audio-based applications that make use of multiple users and simultaneous touch inputs on a single multi-touch screen. A natural user interface, where users interact through gestures and movements while directly manipulating musical objects, is a key aspect of the design; the goal is to interact with the technology (specifically music-based technology) in a natural way. The AudioTouch OS consists of four main musical applications: MultiKey, MusicalSquares, Audioshape sequencer and Musical Wong.

MUSICtable

A ubiquitous system that uses spatial visualization to support exploration of, and social interaction with, a large music collection. The interface is based on the interaction semantic of influence, which allows users to affect and control the mood of the music being played without having to select specific songs.

realsound

An interactive installation combining image and sound through a tangible interface. By operating 24 buttons on the tabletop, visitors can create an audio-visual composition of their own design. Each button has its own unique sound, which is linked to an image.

Wednesday, April 29, 2009

Reactivision

I started to make my own interactive music player today. The aim for this final artefact is to design an interface that overcomes the language barrier between cultures. To do this I used a webcam, ReacTIVision 1.4, Flash and ActionScript.

The webcam was mounted on a clamp and fiducial markers were laid out in front of it. The camera is connected to the ReacTIVision 1.4 application, which is programmed to track these markers and also supports multi-touch finger tracking.

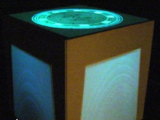

Below is an example of the Flash interface which uses the TUIO protocol to connect to the ReacTIVision application.

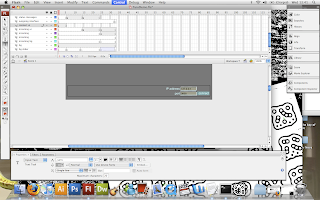

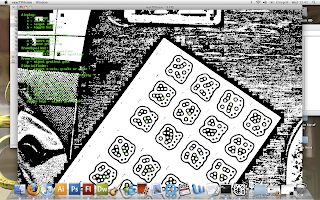
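On the receiving side, a TUIO client (Flash here, or Pure Data later) keeps a list of the markers currently on the table. In the TUIO 1.1 object profile, `alive` lists the session IDs still present, `set` updates one object's fiducial ID, position and angle, and `fseq` closes the frame. The Python sketch below assumes the messages have already been decoded into an address and a list of arguments; the class and field names are my own, not part of any TUIO library.

```python
from dataclasses import dataclass


@dataclass
class TuioObject:
    session_id: int   # unique per placement of a marker on the table
    fiducial_id: int  # which printed marker it is
    x: float          # normalised 0..1, left to right
    y: float          # normalised 0..1, top to bottom
    angle: float      # radians, 0..2*pi


class ObjectTracker:
    """Keeps the markers currently on the table, keyed by session ID."""

    def __init__(self) -> None:
        self.objects: dict[int, TuioObject] = {}

    def handle(self, address: str, args: list) -> None:
        if address != "/tuio/2Dobj" or not args:
            return
        command = args[0]
        if command == "alive":
            # Any session ID missing from the alive list has left the table.
            alive = set(args[1:])
            for session_id in list(self.objects):
                if session_id not in alive:
                    del self.objects[session_id]
        elif command == "set":
            session_id, fiducial_id, x, y, angle = args[1:6]
            self.objects[session_id] = TuioObject(session_id, fiducial_id, x, y, angle)
        # "fseq" only marks the end of a frame; nothing to do in this sketch.


tracker = ObjectTracker()
tracker.handle("/tuio/2Dobj", ["alive", 12])
tracker.handle("/tuio/2Dobj", ["set", 12, 3, 0.5, 0.25, 1.5, 0.0, 0.0, 0.0, 0.0, 0.0])
tracker.handle("/tuio/2Dobj", ["fseq", 1])
print(tracker.objects)
tracker.handle("/tuio/2Dobj", ["alive"])  # marker lifted off the table
print(tracker.objects)
```

Relying on `alive` rather than an explicit "remove" message means a lost packet cannot leave a ghost marker behind for long: the next frame's `alive` list corrects it.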

Below is an example of the fiducial markers as seen through the webcam. On closer inspection, the paper markers are labelled, and the green numbers show that the application has recognised them. The green text on the left lists the shortcuts that can be used within the application.

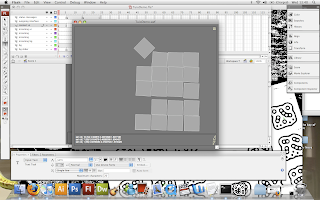

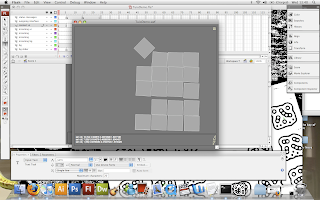

This is an example of what can be seen in Flash when the markers are moved. They are represented as grey boxes that follow the markers wherever they move. At the moment, the hand moving a marker blocks the camera's view and interrupts the signal, so the camera would need to be placed below the table and lit by an LED light.

So now the sensors are set up and working. The next step is to research how to get the sound samples working with this. Sound can be added by changing the XML configuration of the ReacTIVision application so that it works in MIDI mode. Used in conjunction with music software such as Ableton Live or Reaktor, a sound will then play when the user moves a marker.
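Drawing the grey boxes comes down to scaling TUIO's normalised coordinates (0 to 1, origin at the top left) to the stage size. The sketch below assumes a hypothetical 640×480 stage and a 40-pixel box, and includes an optional horizontal flip on the assumption that a camera looking up from under the table sees a mirror image of the surface.

```python
def to_stage(x: float, y: float, width: int, height: int,
             mirror_x: bool = False) -> tuple[int, int]:
    """Convert normalised TUIO coordinates (0..1) to stage pixel coordinates."""
    if mirror_x:
        # Assumed: a camera under the table sees the surface mirrored left-right.
        x = 1.0 - x
    # Clamp in case the tracker reports values just outside 0..1.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return round(x * (width - 1)), round(y * (height - 1))


def grey_box(x: float, y: float, width: int = 640, height: int = 480,
             size: int = 40, mirror_x: bool = False) -> tuple[int, int, int, int]:
    """Return (left, top, w, h) of a square box centred on the marker."""
    cx, cy = to_stage(x, y, width, height, mirror_x)
    return cx - size // 2, cy - size // 2, size, size


print(grey_box(0.5, 0.25))
print(grey_box(0.5, 0.25, mirror_x=True))
```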
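In MIDI mode the end result is a plain MIDI message. As a sketch (in Python, with a hypothetical controller number, not ReacTIVision's own XML mapping), a marker's rotation could be scaled to a 7-bit Control Change value that Ableton Live or Reaktor can then map to a parameter. A Control Change message is three bytes: the status byte `0xB0` combined with the channel, then the controller number, then the value.

```python
import math


def angle_to_cc_value(angle_rad: float) -> int:
    """Scale a marker angle in radians to a 7-bit MIDI value (0..127)."""
    fraction = (angle_rad % (2 * math.pi)) / (2 * math.pi)
    # min() guards against floating-point rounding pushing the result to 128.
    return min(127, int(fraction * 128))


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a three-byte MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("MIDI channel must be 0..15")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller and value must be 0..127")
    return bytes([0xB0 | channel, controller, value])


# Hypothetical mapping: marker rotation drives controller 1 on the first channel.
message = control_change(0, 1, angle_to_cc_value(math.pi))
print(message.hex())
```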

Tuesday, April 28, 2009

Artefact 6: ReacTable

After seeing this at Ars Electronica and filming it as an example, I wanted to create something with it in mind. The experience was truly inspiring: a group of people who had never met before, and who all spoke different languages, managed to connect through interacting with the ReacTable installation by Sergi Jordà, Marcos Alonso, Günter Geiger and Martin Kaltenbrunner (www.reactable.com).

This diagram explains more clearly how the table works:

And these are the different image tags that the camera detects:

Thursday, April 02, 2009

Wednesday, March 25, 2009

Title Sequence

For the title sequence I could go all out and make a fancy sequence, but I don't feel this would benefit the film in any way. I would like to keep the intro short and simple so that it doesn't tire the eyes; the film that follows involves a great deal of fast-paced movement, and I don't want viewers to become fed up before the film has even begun.

This is a simple outline of the template for my credits, which I will be producing in LiveType. So far this is the best animation/motion graphics programme I have found for simple credits, and I can easily export the files as .mov files and import them straight into Final Cut Pro.

Here is the first draft of the title sequence; however, I altered it afterwards to include my name at the beginning.

The video below is a quick example of the scrolling credits that I will use at the end of the film. Again, it is not very exciting or eye-catching, but it serves its purpose and looks simple, so as not to detract from the look of the timelapse film.
